AI-Powered QA: Can You Really Trust Machines to Test Your App?


Every successful app stands on the foundation of strong testing. Without reliable quality assurance, even the best product ideas can fail once they reach users. Testing ensures performance, security, and usability, making it the backbone of software quality.

Now, a new chapter is unfolding with AI-powered QA. Instead of relying only on repetitive manual checks or traditional automation, teams are beginning to use artificial intelligence to speed up processes, predict issues, and adapt test coverage in real time. For startups, enterprises, and product teams, this shift promises faster releases and stronger reliability.

But an important question remains: can machines be trusted to test your app? This post looks at both the opportunities and the challenges of relying on AI in software testing, and at how an AI development company can guide businesses in adopting the technology effectively.

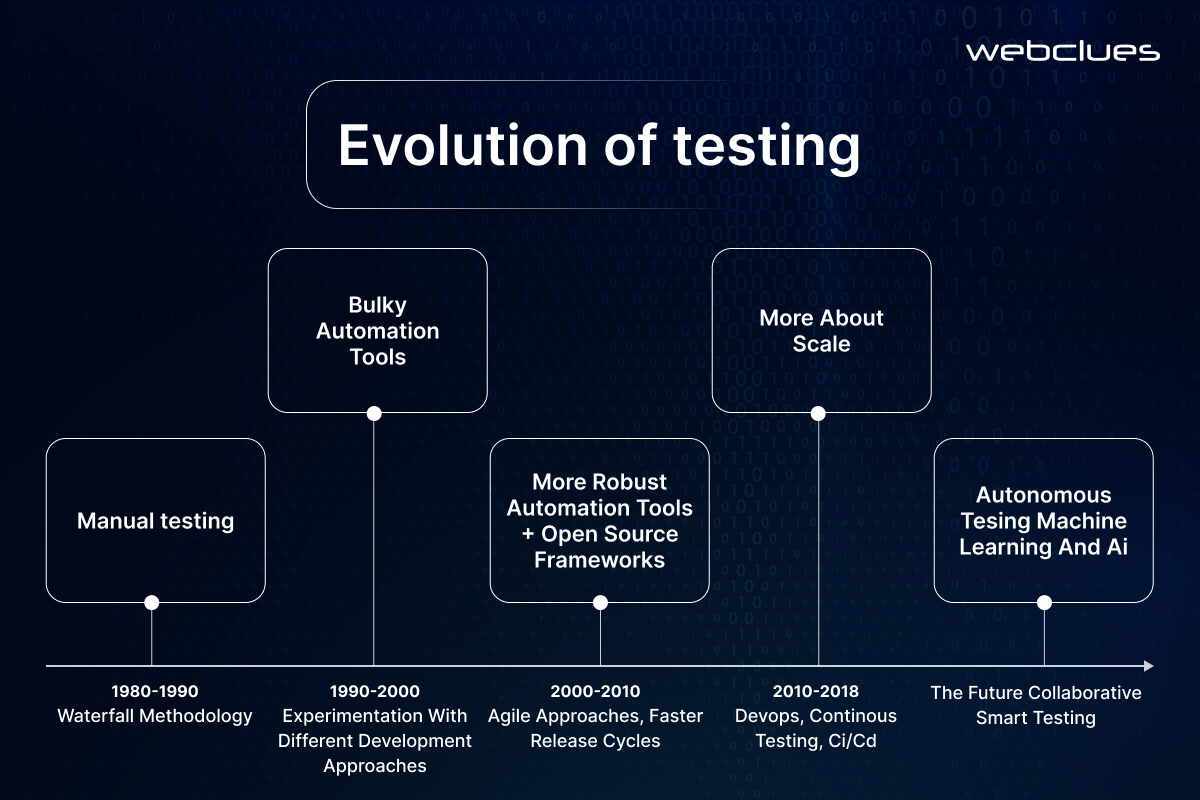

The Advent of AI in Software Testing

Quality assurance has always relied on systematic checks, but the scale and speed of modern applications demand something more advanced. This is where AI in software testing has stepped in. By analyzing large volumes of test data and learning from previous results, AI systems can flag likely defects before they reach users, generate new test cases automatically, and adapt when the application changes.

One of the most notable breakthroughs is the concept of self-healing test scripts. Traditional automated scripts often break when a small change is introduced in the code or user interface. With AI test automation, the system can recognize the shift, adjust the script on its own, and continue running without interruption. This prevents delays and reduces the hours teams spend fixing broken scripts.
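To make the idea concrete, here is a minimal sketch of the fallback logic behind a self-healing locator. It is a simplified, rule-based stand-in: commercial tools typically use learned similarity between elements, and the `Element` class, `find_with_healing` function, and sample page below are hypothetical names invented for this illustration, not part of any real testing library.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """Simplified stand-in for a UI element (hypothetical, not a real driver type)."""
    tag: str
    attrs: dict = field(default_factory=dict)
    text: str = ""


def find_with_healing(page, locators):
    """Try each (attribute, value) locator in order.

    `page` is a list of Element objects. The first locator is the one the
    script was written with; the rest are fallbacks recorded from an
    earlier passing run. Returns the matched element and the locator used.
    """
    for attr, value in locators:
        for element in page:
            candidate = element.text if attr == "text" else element.attrs.get(attr)
            if candidate == value:
                return element, (attr, value)
    raise LookupError(f"No locator matched: {locators}")


# The button's id changed from "submit-btn" to "send-btn" in a new build.
page = [
    Element("input", {"id": "email"}),
    Element("button", {"id": "send-btn", "data-test": "submit"}, text="Submit"),
]
locators = [("id", "submit-btn"), ("data-test", "submit"), ("text", "Submit")]

element, used = find_with_healing(page, locators)
healed = used != locators[0]  # True when a fallback had to be used
print(element.attrs["id"], used, healed)
```

In practice, a healed locator is worth logging so a tester can confirm that the script still points at the intended element.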

The benefits are clear. Tests execute faster, coverage expands across more user scenarios, and repetitive checks become less prone to human error. For engineering teams, this means less time spent on repetitive tasks and more time focused on building features that matter to users. For businesses, it means higher confidence in every release and the ability to deliver updates at a pace that keeps them competitive.

How AI Test Automation Works

At the core of AI test automation are machine learning models trained to process large sets of test data. These models learn patterns from past results and can predict where defects are most likely to appear. By scanning new code against historical outcomes, they flag anomalies that might signal potential bugs.
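As a rough illustration of learning from past results, the sketch below ranks changed files by how often earlier changes to them were followed by a failing test run. It is a plain frequency count with smoothing, used here as a stand-in for a trained model; the `defect_risk` function, file names, and history records are all assumptions made up for this example.

```python
from collections import Counter


def defect_risk(history, changed_files, smoothing=1.0):
    """Rank changed files by smoothed historical failure rate.

    `history` is a list of (file_path, caused_failure) records from past
    changes. Smoothing keeps files with no history at a neutral 0.5
    instead of an overconfident 0.
    """
    changes = Counter()
    failures = Counter()
    for path, caused_failure in history:
        changes[path] += 1
        if caused_failure:
            failures[path] += 1

    scores = {
        path: (failures[path] + smoothing) / (changes[path] + 2 * smoothing)
        for path in changed_files
    }
    # Highest risk first, so testers know where to look before anything else.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


history = [
    ("checkout.py", True),
    ("checkout.py", True),
    ("checkout.py", False),
    ("profile.py", False),
    ("profile.py", False),
]
ranked = defect_risk(history, ["profile.py", "checkout.py", "search.py"])
for path, score in ranked:
    print(f"{path}: {score:.2f}")
```

A real model would draw on richer signals such as code churn, authorship, and test coverage, but the output is used the same way: to direct attention to the riskiest changes.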

Beyond defect detection, AI helps in creating tests themselves. Instead of engineers manually writing scripts for every case, systems can generate automated tests based on application behavior and previous failures. This shortens preparation time and ensures broader coverage across different use scenarios.

A key capability is anomaly detection. If a performance test shows unusual behavior or if a regression test produces unexpected outcomes, the system highlights these for closer review. In functional testing, AI can simulate user flows and quickly validate whether features work as intended. In regression testing, it checks that recent changes have not broken existing functionality. In performance testing, it observes response times and scalability under load while identifying irregular patterns.
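One simple way to picture anomaly detection in a performance test is a robust outlier check on response times. The sketch below uses the median and the median absolute deviation (MAD), a standard statistical technique rather than the method of any particular tool; the `flag_anomalies` function, the 3.5 threshold, and the sample timings are illustrative assumptions.

```python
import statistics


def flag_anomalies(samples_ms, threshold=3.5):
    """Return (index, value) pairs whose modified z-score exceeds the threshold.

    Uses the median and MAD so that a single extreme value does not
    distort the baseline the way a mean and standard deviation would.
    """
    median = statistics.median(samples_ms)
    mad = statistics.median(abs(x - median) for x in samples_ms)
    if mad == 0:
        return []  # All samples are effectively identical; nothing to flag.
    return [
        (index, value)
        for index, value in enumerate(samples_ms)
        if 0.6745 * abs(value - median) / mad > threshold
    ]


# Response times in milliseconds from a hypothetical load test.
response_times = [120, 118, 125, 122, 119, 121, 480, 123, 117, 124]
anomalies = flag_anomalies(response_times)
print(anomalies)
```

Here the 480 ms sample is flagged for closer review while normal variation around 120 ms is left alone.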

The combination of automated generation, self-adjusting scripts, and continuous monitoring demonstrates the strength of machine learning in QA. It is not about replacing skilled testers but about giving them sharper tools that work at a scale and speed humans alone cannot manage.

What Is the Difference Between AI and Manual Testing?

Manual testing has long been the standard approach to validating software. It relies on human testers applying their knowledge, intuition, and creativity to explore applications and identify issues. This method brings context and flexibility, as testers can adapt quickly to unexpected scenarios or think beyond predefined cases.

AI vs manual testing is not a question of one replacing the other; each contributes differently. With machine learning in QA, automation can run thousands of test cases quickly, cover a wider range of user paths, and process results at a scale far beyond human capacity. Where humans may overlook repetitive details, AI systems thrive on consistency and speed.

The real value comes when both approaches are combined. Human testers bring judgment and insight, while AI provides efficiency and reach. Together, they create a testing strategy that balances depth with scale.

| Aspect | Manual Testing | AI-Driven Automation |
| --- | --- | --- |
| Strengths | Context, creativity, intuition | Speed, coverage, scalability |
| Best Use Cases | Exploratory testing, usability checks | Regression testing, performance validation |
| Weaknesses | Time-consuming, prone to human error | Limited judgment, depends on data quality |
| Outcome | Deeper user insight, nuanced feedback | Faster cycles, consistent large-scale checks |

Can AI Replace Manual Testing?

One question often comes up when teams explore automation: can AI replace manual testing? The short answer is no. While AI-powered QA has transformed testing by increasing speed and coverage, it cannot fully replace the depth of human insight.

AI can run thousands of regression checks, detect anomalies, and generate scripts that adjust themselves as applications evolve. These abilities make it a powerful ally. However, testing is not only about execution. It involves judgment, intuition, and understanding the nuances of user behavior. Machines cannot replicate that context.

Human oversight remains essential. AI may highlight a pattern that looks unusual, but only a skilled tester can determine whether it is a real problem or an acceptable variation. Without human validation, teams risk trusting false positives or missing subtle usability issues.

The real value lies in combining both. AI handles scale, consistency, and repetitive checks, while testers focus on creative exploration and complex decision-making. Together, they provide a balanced approach to quality that neither could achieve alone.

How Accurate Are AI Testing Tools?

Accuracy is often the biggest concern when teams adopt AI testing tools. While the technology is powerful, it is not without limitations. Systems can produce false positives, flagging issues that are not actual defects. They are also dependent on the quality of their training data. If the models learn from incomplete or biased datasets, the results can be misleading. Edge cases add another layer of complexity, as unusual user paths or rare conditions may not be handled well by automation.
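A practical way to keep an eye on false positives is to compare what a tool flagged against what testers later confirmed. The sketch below computes precision and recall from two sets of issue IDs; the `review_metrics` function and the IDs are hypothetical and do not reflect measurements from any real tool.

```python
def review_metrics(flagged, confirmed):
    """Compare tool-flagged issues with tester-confirmed defects.

    precision: share of flagged issues that were real defects.
    recall: share of real defects that the tool flagged.
    """
    flagged, confirmed = set(flagged), set(confirmed)
    true_pos = len(flagged & confirmed)
    false_pos = len(flagged - confirmed)
    false_neg = len(confirmed - flagged)
    precision = true_pos / (true_pos + false_pos) if flagged else 0.0
    recall = true_pos / (true_pos + false_neg) if confirmed else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "false_positives": false_pos,
        "missed_defects": false_neg,
    }


# Issue IDs are made up for illustration.
flagged_by_tool = ["T1", "T2", "T3", "T4", "T5"]
confirmed_by_testers = ["T1", "T2", "T3", "T9"]
metrics = review_metrics(flagged_by_tool, confirmed_by_testers)
print(metrics)
```

Tracking these two numbers over several releases gives a team evidence, rather than impressions, about how far the tool's output can be trusted.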

So, should I trust AI to test my app? The answer is not absolute. These tools can be trusted to handle large-scale regression tests, performance checks, and repetitive validations with impressive speed. They reduce the chance of human error and free testers from routine tasks. At the same time, trust cannot mean blind reliance. Results need review, and human testers must validate outcomes that fall outside expected patterns.

The right approach is to view accuracy as a shared responsibility. How accurate are AI testing tools? Accurate enough to strengthen your QA strategy when used wisely, but not accurate enough to work without oversight. Trust in this context means using AI for what it does best, while keeping skilled testers in the loop to make final judgments.

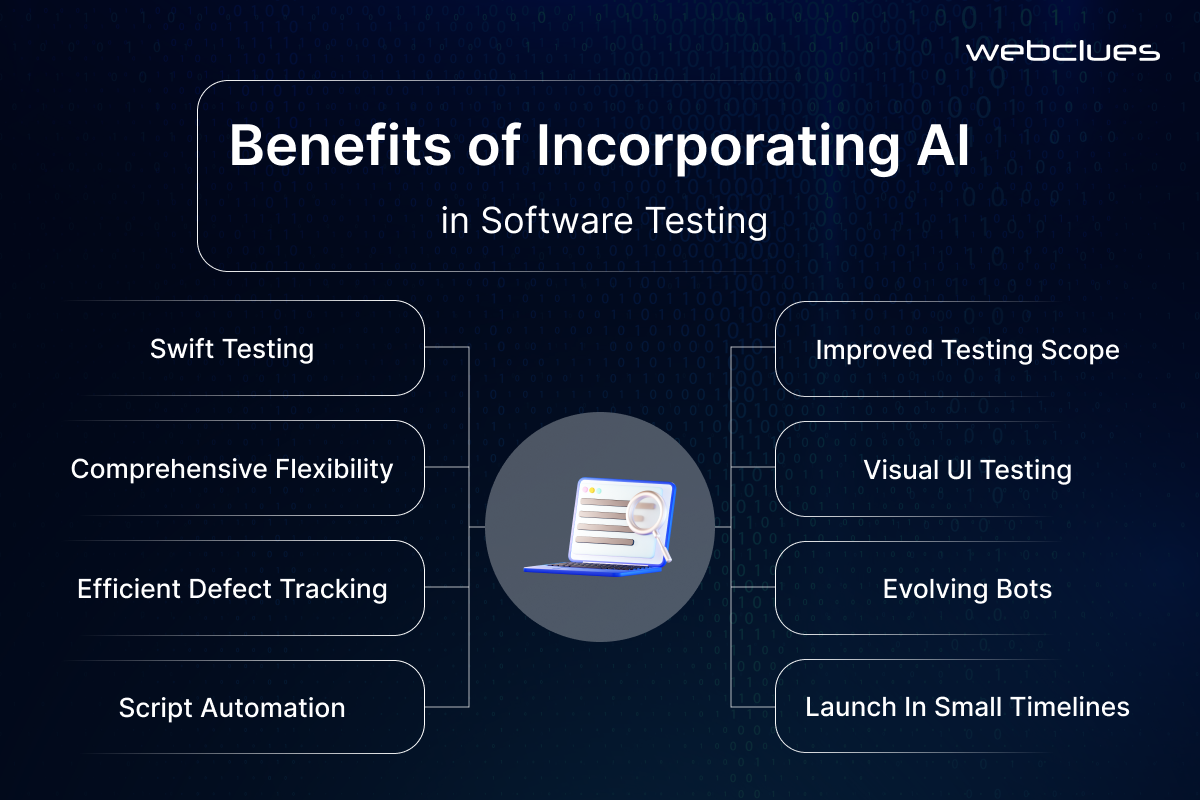

Benefits for Businesses and Teams

The practical impact of AI in software testing is most visible when it comes to business outcomes. Faster release cycles are one of the biggest advantages. Automated systems powered by machine learning can run tests continuously, cutting down the time needed to validate new features and updates. This allows teams to ship improvements more often without sacrificing reliability.

Another benefit is stronger defect prediction. By analyzing patterns in past failures, AI can forecast where issues are most likely to appear and direct attention to those areas. This proactive approach reduces the number of bugs that make it into production and improves overall product stability.

Cost reduction is also a natural result. By automating repetitive checks and maintaining scripts that heal themselves, companies save both time and resources. Testers spend less effort fixing broken scripts or rerunning lengthy manual processes and can focus instead on strategic improvements.

For startups, this means gaining the ability to compete with larger players by moving faster and delivering polished products without needing a large QA team. For enterprises, it means scaling quality assurance across complex systems without dramatically increasing cost. In both cases, working with providers of AI development services can help teams implement these solutions in a way that fits their existing workflows and goals.

Challenges of AI-Powered QA

While the promise of AI-powered QA is significant, adopting it is not without challenges. One of the most common hurdles is the skill gap. Teams that are used to traditional testing often need new training to understand how to work alongside AI-driven systems. Without this knowledge, the technology can end up underutilized.

Integration costs are another factor. Setting up infrastructure, licensing tools, and aligning them with existing pipelines can be expensive at the start. There is also the complexity of the initial setup. Models need proper data to learn from, and automation frameworks must be configured carefully to deliver accurate results.

Perhaps the biggest risk is over-reliance. Without human validation, teams may accept test outcomes at face value, which can lead to undetected usability issues or false confidence in results. Recognizing the challenges of AI in QA means understanding that AI is a strong enabler but not a replacement for thoughtful oversight.

How to Get Started with AI in QA

For teams ready to adopt AI in their testing workflows, a step-by-step approach helps reduce friction and ensures smoother results.

1. Identify test areas for automation

Begin by pinpointing repetitive and time-intensive tasks such as regression checks or performance validation. These are well-suited for automation and can deliver quick wins.

2. Choose AI testing tools

Evaluate tools that align with your application stack and project needs. Look for solutions that provide test generation, anomaly detection, and self-healing capabilities.

3. Train teams

Provide testers and developers with the knowledge to work with these tools effectively. This ensures the technology is used to its full potential rather than treated as a black box.

4. Start with a hybrid AI + manual approach

Combine automation with human oversight. Let AI handle scale and speed, while testers validate results and focus on exploratory testing and user experience.
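The hybrid approach in step 4 can be sketched as a simple routing rule: findings the tool is highly confident about in well-understood areas are handled automatically, and everything else goes to a tester. The `triage` function, the 0.9 threshold, and the finding records below are assumptions chosen for illustration; a team would tune them to its own tools and risk tolerance.

```python
def triage(findings, auto_threshold=0.9):
    """Split findings into those handled automatically and those needing review.

    Only high-confidence regression findings are auto-handled. Lower
    confidence results, and anything touching usability, go to a human.
    """
    automated, needs_review = [], []
    for finding in findings:
        is_confident = finding["confidence"] >= auto_threshold
        if is_confident and finding["kind"] == "regression":
            automated.append(finding["id"])
        else:
            needs_review.append(finding["id"])
    return automated, needs_review


# Hypothetical findings reported by an AI testing tool.
findings = [
    {"id": "F1", "kind": "regression", "confidence": 0.97},
    {"id": "F2", "kind": "usability", "confidence": 0.95},
    {"id": "F3", "kind": "regression", "confidence": 0.62},
]
automated, needs_review = triage(findings)
print("automated:", automated)
print("needs review:", needs_review)
```

Starting with a strict threshold and relaxing it as the team gains confidence keeps human oversight in place during early adoption.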

Working with an experienced AI development company can make this process easier. Such a partner brings expertise in selecting the right tools, integrating them with existing workflows, and training teams to work with AI effectively. This guidance helps businesses avoid missteps and achieve real value faster.

Conclusion

So, can machines be trusted to test your app? The answer is yes, but only with oversight. AI-powered QA delivers speed, coverage, and consistency at a scale that manual testing alone cannot achieve. Yet, it is not a replacement for human judgment. True reliability comes when automation handles the heavy lifting and skilled testers validate the results, delivering both accuracy and usability.

For businesses, this balance is where the real value lies. By adopting AI development services, you can modernize your QA processes, cut down release cycles, and improve overall product quality without losing the critical perspective of human testers.

If you are looking to make this shift, partnering with an experienced AI development company like WebClues can help. From selecting the right tools to integrating them with your workflows, WebClues can guide your team in building a testing strategy that combines the strength of automation with the assurance of human expertise. Connect with us today for a free consultation.

