How Enterprises Are Building Private AI Clouds in 2025


In 2025, the conversation around enterprise AI is shifting rapidly, and private AI clouds are leading the way. As companies double down on building their own AI capabilities, relying entirely on public cloud providers no longer offers the control, security, or long-term scalability they need.

Instead, enterprises are moving toward private AI cloud solutions, creating dedicated environments to host, train, and fine-tune their AI models on their own terms. This shift is not just about better performance. It is about owning critical infrastructure in an AI-driven economy.

At the heart of this movement is the rise of self-hosted LLMs and enterprise AI hosting, where businesses are building personalized ecosystems to run everything from private GPTs to proprietary generative AI workflows. To make this leap effectively, many are working closely with trusted partners specializing in AI development services. Companies like WebClues Infotech understand the demands of modern enterprise AI and help bring these private clouds to life.

Why Enterprises Are Prioritizing Private AI Cloud Infrastructure in 2025

The move toward private AI cloud infrastructure is not just a trend. It is quickly becoming a necessity for enterprises that want to stay competitive, compliant, and cost-effective in 2025.

One of the biggest drivers is the growing pressure around data privacy and regulatory compliance. Frameworks like GDPR in Europe and HIPAA in the United States have made it clear that sensitive data cannot be casually handed over to third-party AI services. Enterprises are realizing that building private AI infrastructure gives them full control over how their data is used, stored, and protected.

There is also the question of long-term cost efficiency. While public LLM APIs initially seem cheaper, the costs of scaling usage can escalate quickly, especially for companies training or fine-tuning models regularly. Hosting models internally through enterprise AI hosting or self-hosted LLMs offers a far more sustainable and predictable cost structure at scale.
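A rough way to reason about this trade-off is a break-even calculation. The sketch below compares a usage-priced API with a fixed monthly self-hosting budget. Every figure in it is a hypothetical placeholder chosen for illustration, not a quote from any provider, and the model ignores factors such as utilization and engineering time.

```python
def monthly_api_cost(tokens_per_month: float, price_per_million_tokens: float) -> float:
    """Cost of a usage-priced API for a given monthly token volume."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def break_even_tokens(fixed_monthly_cost: float, price_per_million_tokens: float) -> float:
    """Monthly token volume above which a fixed-cost deployment is cheaper."""
    return fixed_monthly_cost / price_per_million_tokens * 1_000_000


# Hypothetical inputs for illustration only.
API_PRICE = 5.0               # USD per million tokens (assumed)
SELF_HOSTED_FIXED = 20_000.0  # USD per month for hardware, power, staff (assumed)

threshold = break_even_tokens(SELF_HOSTED_FIXED, API_PRICE)
print(f"Break-even volume: {threshold:,.0f} tokens/month")
```

Below the break-even volume the API is cheaper under these assumptions; above it, the fixed-cost deployment wins, which is why heavy and steady workloads tend to favor self-hosting.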

Another key reason enterprises are embracing private AI clouds is the ability to customize models using proprietary datasets. Public cloud AI services are built for broad applications, but enterprises often need specialized models that understand their unique context. LLM self-hosting provides the flexibility to train and refine models without external limitations.

Finally, there is a growing need for AI autonomy. Companies want to reduce reliance on third-party providers and integrate AI closer to their edge systems for faster inference and tighter data security. By building private AI clouds, enterprises can extend their AI capabilities wherever they need them, whether on-premises or across multi-cloud setups.

According to a 2025 report by Gartner, over 65 percent of enterprises building AI models now prefer private or hybrid cloud environments over public cloud-only strategies. This signals a clear shift toward owning the infrastructure that drives future innovation.

Key Components of a Private AI Cloud Setup

Setting up a private AI cloud is more than just moving models onto a server. It requires careful planning across infrastructure, security, and scalability. Enterprises investing in private AI cloud solutions in 2025 focus on three major components that form the backbone of building private AI infrastructure.

On-Premises AI Hosting

One of the first decisions enterprises face is whether to run their AI workloads fully on-premises or adopt a hybrid approach. On-premises AI hosting gives companies full ownership and control over their hardware, networking, and security layers. This setup is ideal for industries with strict compliance needs, such as healthcare, finance, and defense.

For others, a hybrid model works better. In a hybrid setup, critical data and models are hosted internally, while less sensitive workloads can be distributed across public cloud platforms. Choosing between full on-premises or hybrid hosting depends on the level of sensitivity, performance needs, and cost considerations of each enterprise.
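One way to picture a hybrid policy is as a placement rule keyed on data sensitivity. The following is a minimal sketch; the sensitivity tiers, the `Workload` type, and the placement labels are all hypothetical names introduced for illustration, and a real policy would also weigh performance and cost.

```python
from dataclasses import dataclass
from enum import Enum


class Sensitivity(Enum):
    PUBLIC = 1
    INTERNAL = 2
    REGULATED = 3


@dataclass(frozen=True)
class Workload:
    name: str
    sensitivity: Sensitivity


def placement(workload: Workload) -> str:
    """Keep anything above PUBLIC on-premises; allow the rest in public cloud."""
    if workload.sensitivity is Sensitivity.PUBLIC:
        return "public-cloud"
    return "on-premises"


for job in (
    Workload("marketing-copy-drafts", Sensitivity.PUBLIC),
    Workload("patient-record-summaries", Sensitivity.REGULATED),
):
    print(job.name, "->", placement(job))
```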

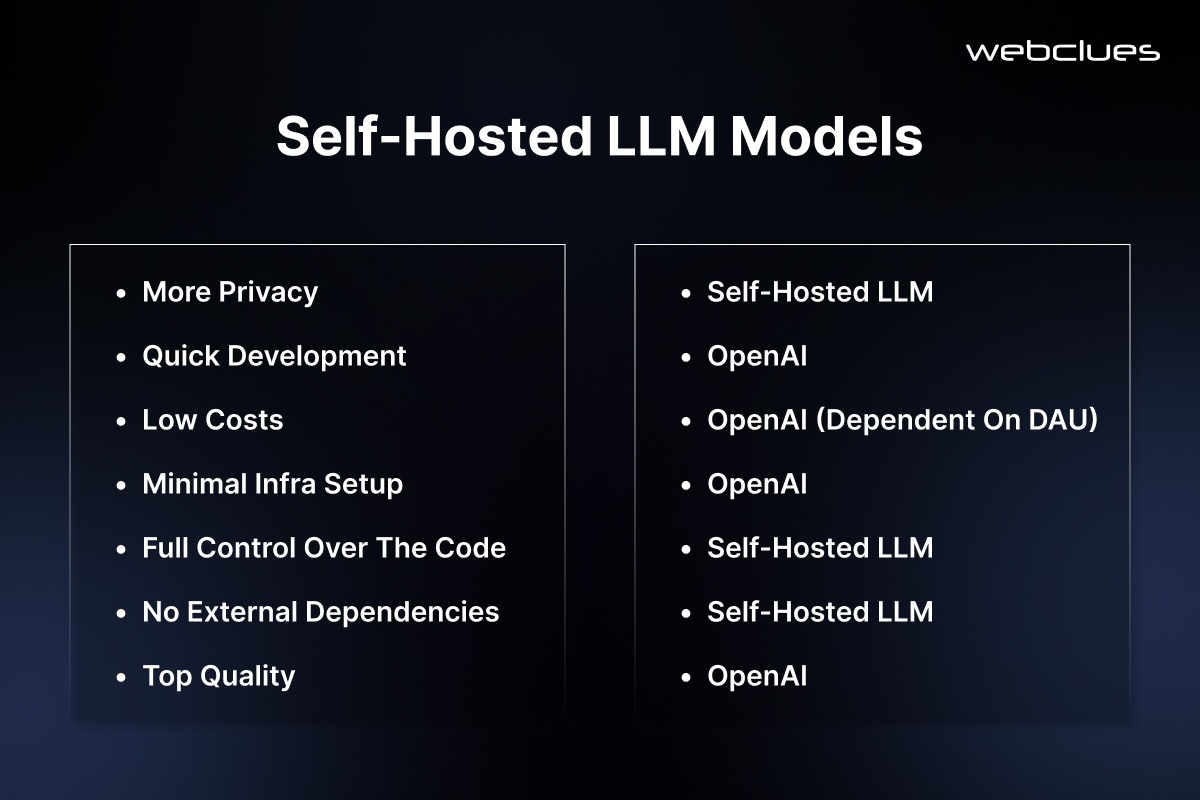

Self-Hosted LLM Models

As enterprises aim to control and customize their AI capabilities, self-hosted LLMs are becoming a standard. Rather than relying on third-party APIs like OpenAI’s GPT, companies are choosing to host models in their own environments. This approach offers better data privacy, greater fine-tuning flexibility, and full control over inference behavior.

In 2025, several open-source and commercially available LLMs are making LLM self-hosting even more viable. Popular choices include Mistral, Llama 3, and Phi-3, each offering different strengths depending on use cases like chatbots, document summarization, and private enterprise knowledge bases. With the right hosting environment, enterprises can deploy these models and continuously improve them based on their proprietary datasets without external dependency.
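From an application's point of view, a self-hosted model often looks like an internal HTTP service. Several self-hosting servers, vLLM among them, expose an OpenAI-compatible chat completions API, so the sketch below builds a request in that shape using only the Python standard library. The host name, port, and model name are hypothetical, and the serving stack is an assumption rather than something this article prescribes.

```python
import json
import urllib.request

# Hypothetical internal endpoint and model name; replace with your own.
BASE_URL = "http://llm.internal.example:8000/v1"
MODEL_NAME = "my-org/private-model"


def build_chat_request(prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-compatible chat completion request."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request("Summarize our leave policy.")
# Sending is left commented out because it needs a running server:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["choices"][0]["message"]["content"])
```

Because the request never leaves the internal network, prompts and responses stay inside the enterprise boundary.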

Secure AI Cloud Hosting

Security remains the most critical pillar of any private AI cloud deployment. Secure AI cloud hosting ensures that models, training data, and inference activities are fully protected against unauthorized access or leaks.

This involves multiple layers of encryption, both in transit and at rest. Enterprises also design strict access controls around their AI environments, ensuring that only authorized personnel and applications can interact with the models. Private data training processes, often run in isolated environments, are increasingly standard to maintain data integrity. Building a secure foundation is not just about compliance. It is about maintaining customer trust and protecting the intellectual property that powers next-generation AI capabilities.
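The access-control idea can be illustrated with a deny-by-default permission check. This is a minimal sketch: the role names and action strings are hypothetical, and a production system would typically delegate this to an identity provider or policy engine rather than an in-memory table.

```python
# Hypothetical roles and actions, for illustration only.
ROLE_PERMISSIONS = {
    "ml-engineer": {"model:infer", "model:fine-tune"},
    "analyst": {"model:infer"},
    "auditor": {"logs:read"},
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are rejected."""
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("analyst", "model:infer"))      # permitted for this role
print(is_allowed("analyst", "model:fine-tune"))  # not in the analyst's set
```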

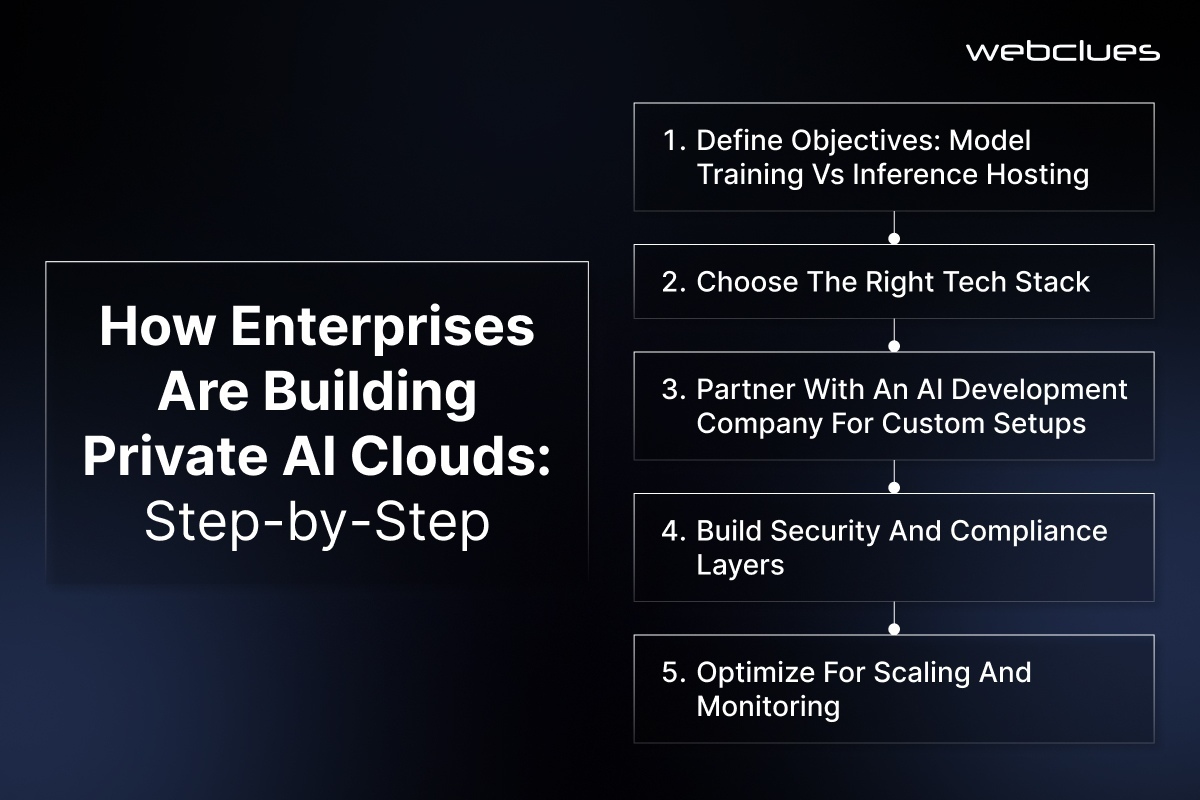

How Enterprises Are Building Private AI Clouds: Step-by-Step

Building a private AI cloud is not a one-size-fits-all project. Every enterprise needs a clear strategy that fits its goals, resources, and long-term AI vision. Here is a look at the essential steps companies are following when building private AI infrastructure in 2025.

1. Define Objectives: Model Training vs. Inference Hosting

The first step is setting clear goals. Some enterprises aim to train large language models from scratch or fine-tune existing models with their proprietary data. Others focus only on hosting models for inference, where performance, reliability, and low latency are the priorities. Defining the objective shapes every decision that follows, from hardware selection to software frameworks.
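The effect of this choice on hardware can be estimated with simple arithmetic. The sketch below uses two common rules of thumb: about 2 bytes per parameter to hold fp16 weights for inference, and about 16 bytes per parameter for mixed-precision training with the Adam optimizer. Both are rough assumptions that exclude activations, the KV cache, and framework overhead, so treat the results as lower bounds.

```python
BYTES_FP16_WEIGHTS = 2
# Mixed-precision Adam rule of thumb: fp16 weights (2) + fp16 gradients (2)
# + fp32 master weights, momentum, and variance (4 + 4 + 4) = 16 bytes/param.
BYTES_TRAINING_ADAM_MIXED = 16


def memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Approximate memory for model state, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


params = 7e9  # a 7-billion-parameter model, chosen as an example size
print(f"Inference (fp16 weights only): {memory_gb(params, BYTES_FP16_WEIGHTS):.0f} GB")
print(f"Full fine-tuning (Adam, mixed): {memory_gb(params, BYTES_TRAINING_ADAM_MIXED):.0f} GB")
```

The eightfold gap between the two estimates is one reason inference-only deployments can run on far smaller clusters than training setups.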

2. Choose the Right Tech Stack

Once the objectives are clear, enterprises move to selecting the right tech stack. For those aiming to deploy private GPT models or other generative AI systems, open-source LLMs like Mistral, Llama 3, or Phi-3 often form the foundation. Alongside the models, companies set up supporting components like vector databases for retrieval-augmented generation (RAG), distributed training platforms, and scalable cloud infrastructure that can grow with demand.
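To make the role of a vector database in RAG concrete, here is a toy retrieval step in pure Python. It ranks stored documents by cosine similarity to a query vector and returns the closest matches, which would then be passed to the model as context. The three-dimensional vectors and document names are made-up stand-ins for real embeddings, and a real vector database would use approximate nearest-neighbor indexes instead of a full scan.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k documents most similar to the query."""
    ranked = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked[:k]]


# Toy vectors standing in for real embeddings (assumed values).
INDEX = {
    "hr-policy": [0.9, 0.1, 0.0],
    "security-runbook": [0.1, 0.9, 0.2],
    "sales-faq": [0.0, 0.2, 0.9],
}

query_vector = [0.8, 0.2, 0.1]
print(top_k(query_vector, INDEX, k=2))
```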

3. Partner with an AI Development Company for Custom Setups

Given the complexity involved, many enterprises choose to work with a trusted AI development company. A good partner brings deep experience in setting up self-hosted LLMs, building secure AI environments, and optimizing for both performance and cost. Partnering with the right team ensures that custom requirements, from unique data pipelines to advanced monitoring needs, are built into the system from day one.

4. Build Security and Compliance Layers

Security cannot be an afterthought when building private AI clouds. Enterprises need to incorporate encryption protocols, fine-grained access controls, audit logging, and regulatory compliance measures like GDPR or HIPAA alignment from the start. The goal is to create a secure environment where both data and models are protected without compromising usability.
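Audit logging is more useful when tampering is detectable. One common technique, shown here as a minimal sketch, is to chain entries by hash so that editing any earlier record invalidates everything after it. The field names are hypothetical, and this in-memory list stands in for whatever durable, access-controlled store a real deployment would use.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Stable SHA-256 over the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], actor: str, action: str) -> None:
    """Append an entry that commits to the hash of the previous one."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"actor": actor, "action": action, "prev": prev_hash}
    log.append({**body, "hash": _digest(body)})


def verify(log: list[dict]) -> bool:
    """Recompute every hash and check that the chain links are intact."""
    prev_hash = GENESIS
    for entry in log:
        body = {"actor": entry["actor"], "action": entry["action"], "prev": entry["prev"]}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log: list[dict] = []
append_entry(audit_log, "alice", "model:fine-tune")
append_entry(audit_log, "bob", "model:infer")
print(verify(audit_log))
```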

5. Optimize for Scaling and Monitoring

Finally, successful private AI clouds are designed to evolve. Enterprises set up monitoring tools to track resource usage, model performance, and system health. They also plan for horizontal scaling, allowing them to expand compute resources or add new models without major disruptions. Long-term success depends not just on the initial setup but on continuous optimization and proactive maintenance.
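Horizontal scaling decisions are usually driven by a monitored metric. The sketch below applies a proportional rule of the kind documented for the Kubernetes Horizontal Pod Autoscaler: scale the replica count by the ratio of the observed metric to its target, rounding up. Kubernetes is an assumption here, not something this article specifies, and the metric values are invented for illustration.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Proportional scaling rule: ceil(current * observed / target), at least 1."""
    return max(1, math.ceil(current_replicas * current_metric / target_metric))


# Example: 4 inference replicas averaging 90% GPU utilization, target 60%.
print(desired_replicas(current_replicas=4, current_metric=90.0, target_metric=60.0))
```

Real autoscalers add tolerances and cooldown windows around this rule so that brief spikes do not cause replicas to be added and removed repeatedly.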

Building a private AI cloud may seem complex, but with the right strategy and the right team, it becomes a powerful foundation for enterprise AI innovation.

At WebClues Infotech, we help enterprises build, scale, and secure private AI clouds customized for 2025 and beyond.

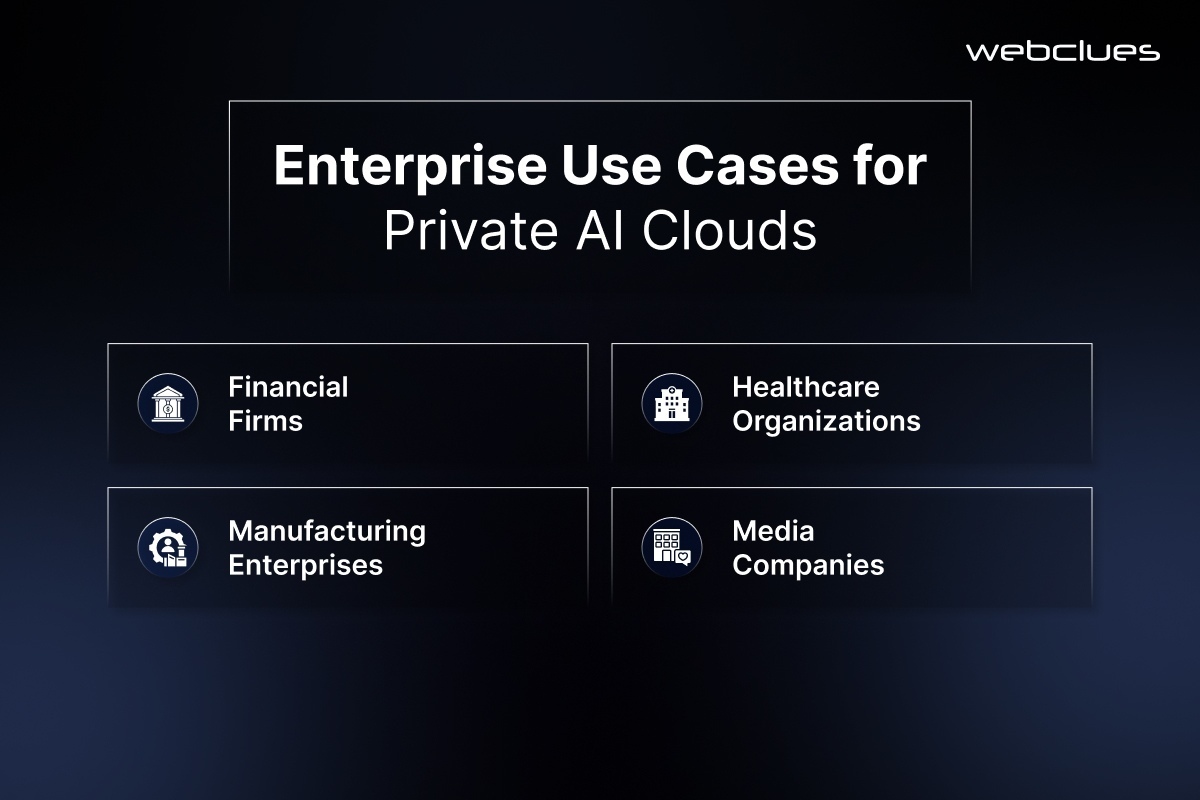

Enterprise Use Cases for Private AI Clouds

The demand for enterprise AI cloud services is not just theoretical. Across industries, real-world use cases are showing how private AI clouds are driving innovation while keeping control firmly in the hands of businesses.

Financial Firms Training Private LLMs for Confidential Analytics

In finance, private AI clouds allow firms to train custom language models on sensitive transaction data without risking exposure. Banks and investment firms are using private clouds for generative AI to build fraud detection systems, risk analysis tools, and even personalized client advisory services. These models operate within secured environments, ensuring that client data stays private and regulatory requirements are met.

Healthcare Companies Managing Patient Data Securely

Healthcare organizations are among the earliest adopters of private AI infrastructure. Hospitals and research centers are using private AI cloud services to manage electronic health records, medical imaging datasets, and clinical trial information securely. By hosting AI models privately, they can develop diagnostic tools and patient monitoring systems that are HIPAA-compliant and shielded from third-party exposure.

Manufacturing Enterprises Building Predictive Maintenance AI Privately

In manufacturing, predictive maintenance powered by AI is helping companies avoid costly downtimes. Enterprises are training private models on sensor data from machinery to forecast failures and schedule maintenance efficiently. Hosting these systems internally not only protects sensitive operational data but also enables real-time edge deployments, where speed and reliability are critical.
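As a simplified illustration of the idea, the sketch below flags sensor readings that deviate sharply from their recent history using a rolling z-score. This is a basic statistical stand-in rather than a trained predictive model, and the vibration values, window size, and threshold are all made-up assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(readings: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indexes whose value is more than `threshold` standard deviations
    away from the mean of the preceding `window` readings."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical vibration amplitudes from one machine, with one obvious spike.
vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 1.02, 4.8, 1.0]
print(flag_anomalies(vibration))
```

A check this light can run on an edge device next to the machine, which matches the real-time deployment pattern described above.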

Media Companies Training Niche Content Models

Media and publishing companies are tapping into private clouds for generative AI to create niche content generation models. Whether it is for automatic summarization of legal documents, personalized newsfeeds, or content moderation tools, having private, fine-tuned AI models allows for better quality control and brand alignment. These enterprises value the ability to customize models without depending on general-purpose APIs that may not understand the nuances of their content strategies.

Common Challenges Enterprises Face in Private AI Cloud Deployment

While the benefits of building private AI infrastructure are clear, the journey is not without its challenges. Many enterprises face real-world hurdles when trying to deploy private AI environments at scale.

High Upfront Costs

Setting up a private AI cloud demands significant initial investment. From powerful hardware to specialized software and security layers, the costs can add up quickly. Enterprises need to carefully balance the immediate infrastructure expenses with the long-term value and control that private AI systems provide.

Talent Shortage for AI Infrastructure Engineering

Another major barrier is the shortage of skilled professionals who understand AI infrastructure deeply. Building and managing a private AI cloud requires expertise across multiple domains, including cloud engineering, machine learning operations, cybersecurity, and network management. Many companies struggle to find or retain talent with the right mix of skills, making it harder to move projects forward efficiently.

Complex Security Management

Security is at the core of any private AI cloud deployment. Enterprises need to not only encrypt data and models but also design access controls, monitor threats, and ensure ongoing compliance with evolving regulations. Managing this complexity without dedicated security expertise can create vulnerabilities that undermine the very purpose of building a private AI cloud.

The Way Forward - Hiring the Right Partners

To overcome these challenges, many enterprises are choosing to hire AI developers with specialized infrastructure experience or to partner with trusted AI development services providers. A capable partner brings the technical depth, hands-on experience, and long-term support needed to set up private AI clouds that are secure, scalable, and ready for future growth.

How to Choose the Right AI Development Company for Private AI Cloud Projects

Selecting the right partner is one of the most important steps in building a successful private AI cloud. Not every provider offering AI development services has the deep technical experience required for infrastructure-heavy enterprise projects. Choosing wisely can make the difference between a secure, scalable deployment and an unstable setup that struggles to grow.

What Expertise to Look For

When looking to hire AI developers or partner with an AI development company, expertise in a few key areas becomes critical. Your partner should have hands-on experience in LLM customization, including fine-tuning large language models for specific enterprise use cases. They should also have proven capabilities in cloud security, understanding how to encrypt data, manage secure access, and monitor threats in real time. Scalability expertise is equally important. Private AI clouds must be built to evolve as models grow larger and data volumes increase.

Importance of Hands-On AI Infrastructure Experience

Building private AI infrastructure goes beyond writing code or developing models. It demands hands-on experience with hardware configurations, distributed computing, storage optimization, and secure networking. Look for teams that have managed real-world deployments of self-hosted LLMs, multi-node training environments, and AI-driven cloud ecosystems. The more practical experience your partner has, the fewer unknowns you will face during and after deployment.

Checklist Before Finalizing an AI Development Partner

Before you finalize an AI development partner, here is a simple checklist to guide your decision:

- Proven experience with self-hosted LLM deployments

- Expertise in private cloud and hybrid cloud setups

- Strong focus on AI security best practices

- Ability to scale infrastructure for future needs

- Transparent communication and long-term support commitment

- Customization capabilities based on your specific industry requirements

At WebClues Infotech, we specialize in end-to-end enterprise AI development services, including private AI cloud deployments. From initial architecture design to ongoing scaling and optimization, our team is built to help enterprises unlock full control over their AI initiatives.

Private AI Cloud Is No Longer Optional for Enterprises in 2025

The shift toward private AI clouds is no longer a nice-to-have strategy. It is a necessary move for enterprises that want to secure their data, control their AI evolution, and reduce long-term operational risks. In 2025, building private AI infrastructure is not just about following market trends. It is about setting up a foundation that gives enterprises full ownership over their AI capabilities.

Companies that invest in owning and managing their AI environments today will be the ones leading innovation tomorrow. They will move faster, adapt models to their needs more easily, and protect their most valuable asset, their data, with complete confidence.

Planning to build your private AI cloud? Hire expert AI developers from WebClues Infotech to make it happen. Our team is ready to help you design, build, and scale private AI infrastructure that is secure and ready for the future.


