The Future of Cloud Computing: What to Expect in 2025 and Beyond

Cloud computing is a game-changing technology that has improved efficiency, innovation, and collaboration in the 21st century. However, cloud computing is not static. It is a dynamic, evolving field that adapts to new challenges and opportunities. So, what will the future of cloud computing bring in 2025 and beyond? What are the main trends and projections that will guide cloud service providers, shape the cloud industry, and affect businesses and individuals? In this blog, we will look at some of the essential aspects of the future of cloud computing and evaluate how these developments will influence various sectors.

Current Trends and Challenges

- AI and ML

Artificial intelligence and machine learning are steadily advancing cloud computing, as they bring new capabilities to cloud services and applications. Google Cloud reports that AI and ML were among the most popular cloud computing skills in 2023, and that 44% of organizations adopted the latest cloud products, such as Google’s AutoML and TensorFlow, as soon as they were released. However, AI and ML also pose ethical and technical challenges, such as data quality, bias, privacy, and security.

- Security

Security is a critical and continuous challenge for cloud computing, as cloud users and providers are exposed to cyberattacks, data breaches, and compliance issues that can cause serious damage and costs. According to Pluralsight, security was the key cloud computing skill that organizations needed to strengthen in 2023, and 64% of leaders said that security was the major hurdle to cloud adoption.

- Sustainability

Sustainability is another key factor in cloud computing, as it determines the environmental and social impact of cloud operations and consumption. Datamation reports that cloud computing can lower greenhouse gas emissions by 59% compared to traditional data centers, and cloud providers are adopting renewable energy sources and carbon-neutral initiatives to power their infrastructure. Because cloud service providers operate the infrastructure behind these services, they can also benefit the environment and society by minimizing the environmental impact of their operations.

Main Trends and Innovations Shaping the Future of Cloud Computing

Trend 1- The Move to Ubiquitous and Heterogeneous Computing

Technology is moving towards ubiquitous and heterogeneous computing. This trend marks a major shift in the world of computing systems: computing is becoming both pervasive and architecturally diverse.

Ubiquitous computing means that computing devices and capabilities are present everywhere in our daily lives. Rather than being confined to desktops and laptops, computing power is increasingly integrated into everyday objects, places, and activities. From smart homes and wearable devices to industrial machinery, computing resources are widespread, forming a connected network of smart systems.

At the same time, heterogeneous computing reflects the growing variety of hardware architectures used in computing systems. Unlike the past, when a single type of general-purpose processor dominated, modern computing environments combine different kinds of processors, accelerators, and specialized hardware components. This diversity is driven by the need for better performance in specific tasks, such as artificial intelligence, graphics rendering, or data processing.

The demand for improved efficiency, flexibility, and optimization for specific tasks drives the move to ubiquitous and heterogeneous computing. By spreading computing capabilities across various devices and using diverse architectures, this trend offers more powerful and personalized solutions that match the evolving demands of users and industries.

How Will It Affect the Cloud Computing Landscape?

- Distributed Cloud Architectures

As computing devices and edge computing solutions become more varied, distributed cloud architectures will become more important. Cloud service providers will likely add edge nodes to their infrastructure, bringing compute closer to end users and lowering latency. This will make their services more adaptable to the varied needs of widespread devices and diverse architectures.

- Adaptation to Heterogeneous Workloads

Heterogeneous computing requires cloud platforms to adapt to various workloads. Cloud providers may offer customized instances for tasks like machine learning, graphics processing, and data analytics. This improves performance and efficiency for users with diverse applications.

- Enhanced Hybrid Cloud Models

As computing becomes more ubiquitous and heterogeneous, hybrid cloud models are expected to become more sophisticated. On-premises infrastructure, edge computing resources, and traditional cloud services will be combined into a unified computing environment. To simplify the integration and operation of these hybrid environments, cloud providers will likely offer seamless orchestration and management tools.

- Security and Privacy Considerations

As computing resources are deployed across more varied environments, security and privacy become more challenging. Cloud providers will have to strengthen their security measures to address the risks posed by edge devices and diverse architectures. There may also be a need for secure, privacy-friendly solutions that keep pace with changing regulations. Google Cloud services are one option for such solutions, as they offer advanced security features, compliance certifications, and data protection tools.

How can businesses and users leverage this trend?

- Task-Specific Workload Optimization

The heterogeneous computing trend allows businesses to select the cloud instances best suited to their tasks. By matching instance types to their workload requirements, they can improve performance and use resources more efficiently.

- Decentralized Data Processing

Transferring large volumes of data to centralized cloud data centers can be avoided by assigning data processing tasks to a network of edge devices. This reduces latency and improves bandwidth efficiency. For example, in video or sensor data analysis, businesses can process data at the edge first and then upload only relevant information to the cloud.
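As a minimal sketch of the "process at the edge, upload only what matters" idea, the Python below keeps routine sensor readings on the device and forwards only out-of-range values. The `Reading` type, the temperature band, and the rule that "relevant" means "outside the normal band" are illustrative assumptions, not any particular platform's API.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor_id: str
    value: float


def filter_at_edge(readings, low, high):
    """Return only the readings outside the normal [low, high] band.

    Readings inside the band are treated as routine and never leave the device.
    """
    return [r for r in readings if r.value < low or r.value > high]


# Hypothetical temperature readings (degrees C) collected on an edge gateway.
readings = [
    Reading("t1", 21.5),
    Reading("t1", 22.0),
    Reading("t1", 35.2),
    Reading("t1", 4.1),
]
to_upload = filter_at_edge(readings, low=15.0, high=30.0)
print(f"uploading {len(to_upload)} of {len(readings)} readings")  # uploading 2 of 4 readings
```

In a real deployment the rule for "relevant" would come from the application, for example a detection model running on the device, but the shape stays the same: decide locally, send little.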

- Enhanced Security through Edge Computing

By moving some security-critical tasks to the edge, businesses can improve security. For instance, running intrusion detection or threat analysis at the edge can help detect and respond to security incidents quickly, containing potential threats before data reaches the central cloud infrastructure.

- Adaptive Resource Allocation

Cloud providers may implement adaptive resource allocation mechanisms that adjust computing resources according to workload characteristics. With this kind of automated scaling, users can get strong performance and cost efficiency without manual tuning. This is very useful for applications with fluctuating resource requirements, such as e-commerce platforms during busy shopping seasons.
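One common way to implement adaptive scaling is a proportional, target-tracking rule: scale the replica count by the ratio of the observed metric to its target. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current * observed / target), with a small tolerance band to avoid flapping. The function name, the 10% tolerance, and the replica bounds are assumptions chosen for illustration.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=20, tolerance=0.1):
    """Proportional scaling: grow or shrink replicas so the metric approaches its target."""
    ratio = current_metric / target_metric
    # Inside the tolerance band, keep the current size to avoid constant resizing.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# Example: average CPU utilization (%) against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))   # 6  (holiday traffic spike)
print(desired_replicas(4, current_metric=62, target_metric=60))   # 4  (within tolerance)
print(desired_replicas(10, current_metric=15, target_metric=60))  # 3  (quiet period)
```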

- Context-Aware Applications

By using data from ubiquitous computing devices, businesses can create context-aware applications that personalize user experience. For example, using location data from mobile devices, retail applications can improve user engagement and satisfaction by providing location-based promotions or recommendations.
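A location-based promotion check usually starts with a distance calculation. This minimal sketch uses the haversine great-circle formula with a mean Earth radius of 6,371 km; the store list, the toy coordinates, and the 5 km radius are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearby_promotions(user_lat, user_lon, stores, radius_km):
    """Return (distance_km, promo) pairs for stores within radius_km, nearest first."""
    hits = []
    for store in stores:
        d = haversine_km(user_lat, user_lon, store["lat"], store["lon"])
        if d <= radius_km:
            hits.append((round(d, 2), store["promo"]))
    return sorted(hits)


# Hypothetical stores at toy coordinates on the equator.
stores = [
    {"name": "Store A", "lat": 0.0, "lon": 0.01, "promo": "10% off coffee"},
    {"name": "Store B", "lat": 0.0, "lon": 1.0, "promo": "free delivery"},
]
print(nearby_promotions(0.0, 0.0, stores, radius_km=5.0))  # [(1.11, '10% off coffee')]
```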

- Energy-Efficient Computing

Heterogeneous computing allows businesses to reduce energy consumption by choosing energy-efficient hardware components for each workload. This is important for sustainability-focused sectors, such as smart cities or green data centers. Businesses can also lower their capital and operational costs, as well as improve their scalability and performance, by choosing cloud computing providers that support heterogeneous computing.

Trend 2- The Rise of Infrastructure Supporting Generative Artificial Intelligence (Generative AI)

Generative AI, defined by its ability to create new content and solutions on its own, is a key advancement in the field of AI. This trend reflects the growing importance of infrastructure built specifically to support and exploit the capabilities of generative AI.

The rise of generative AI-supporting infrastructure is visible in the development of specialized hardware, software frameworks, and cloud-based platforms that improve the efficiency and scalability of generative AI models. Hardware innovations, such as powerful GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units), speed up the training and processing of complex generative AI models. Likewise, software frameworks like TensorFlow and PyTorch give developers the tools needed to implement and fine-tune generative algorithms.

By making generative AI capabilities more accessible, cloud-based platforms allow businesses and researchers to utilize these powerful tools without the need for extensive computing resources. The growth of generative AI-supporting infrastructure is not only making generative AI more accessible but also encouraging innovation across industries, from creative content generation to solving intricate problems in fields like healthcare and finance.

How This Trend Will Transform Cloud Computing Services and Capabilities

- Increased Demand for Computational Resources

Training and running generative AI applications requires a great deal of computing power. As a result, demand for high-performance computing resources will grow, and cloud providers will play a key role in supplying them. Cloud computing services will have to provide flexible, powerful infrastructure, such as GPUs and TPUs, that can support generative AI applications.

- Specialized Generative AI Hosting Solutions

Cloud providers could offer specialized hosting solutions that are designed to run generative AI models efficiently. These solutions might have pre-configured environments with the necessary software frameworks, libraries, and hardware accelerators. This would make it easier for businesses and researchers to deploy generative AI applications, creating a more accessible and user-friendly landscape.

- Integration with Cloud-based AI Services

Generative AI capabilities could be added to the existing AI services of cloud computing platforms. This might include APIs and services that allow developers to utilize pre-trained generative models for specific tasks, like producing content, translating, or problem-solving. By easily adding generative AI into their offerings, cloud providers can improve the versatility of their AI services.

- Resource-efficient Training and Inference

Developing technologies that improve the resource efficiency of training and inference for generative AI models could be a focus for cloud providers. This could include innovations in distributed computing, model parallelism, and federated learning, allowing organizations to train and deploy generative AI models more efficiently on cloud infrastructure.
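Federated learning keeps raw training data on each participant's infrastructure and shares only model updates. The core aggregation step of federated averaging (FedAvg) is a sample-weighted mean of the clients' model weights, sketched below in plain Python. The two-parameter "model" and the client sample counts are hypothetical; a real system would add secure transport, many training rounds, and often secure aggregation.

```python
def federated_average(client_updates):
    """FedAvg-style aggregation.

    client_updates: list of (weights, num_samples) pairs, where weights is a list of floats.
    Each client's weights count in proportion to how many local samples it trained on.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    global_weights = [0.0] * dim
    for weights, n in client_updates:
        for i, w in enumerate(weights):
            global_weights[i] += w * n / total
    return global_weights


# Two hypothetical clients: the second trained on three times as much data.
updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
print(federated_average(updates))  # [2.5, 3.5]
```

Only the weight vectors travel to the aggregator; the 400 underlying training examples never leave their owners.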

- Security and Privacy Factors

Generative AI’s transformative impact on cloud computing also creates security and privacy problems. Cloud providers will have to adopt strong security measures to safeguard the sensitive data used to train generative AI models. Moreover, privacy-preserving techniques, like federated learning and differential privacy, may become essential for generative AI applications running on cloud platforms.
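Differential privacy can be illustrated with the Laplace mechanism: add noise drawn from a Laplace distribution with scale sensitivity / epsilon to a query result. The sketch below applies it to a simple count, where adding or removing one person's record changes the answer by at most 1. The dataset, the predicate, and epsilon = 1.0 are assumptions for illustration; production systems would normally use a vetted differential privacy library rather than hand-rolled noise.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two independent exponentials with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Noisy count of records matching predicate (Laplace mechanism)."""
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0  # one record changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)
ages = [23, 35, 41, 29, 52, 61, 38]  # hypothetical records
noisy = private_count(ages, lambda a: a >= 40, epsilon=1.0, rng=rng)
print(f"noisy count of records aged 40+: {noisy:.2f}")
```

Smaller values of epsilon mean larger noise and stronger privacy; the true count (3 here) is never released directly.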

- Cost Management and Affordability

As generative AI becomes more widespread, cloud providers will likely focus on optimizing costs linked to training and running generative models. This optimization may include introducing pricing models tailored to generative AI workloads, incentivizing efficient resource utilization, and discovering cost-effective hardware solutions. By opting for cloud-based service providers, generative AI customers can benefit from more flexibility, security, and reliability, as well as access to the latest technologies and innovations.

How can businesses and users benefit from this trend?

1. Enhanced Security

- Anomaly Detection

Using generative AI, cloud platforms can detect anomalies in security logs and network traffic. Generative models can learn normal patterns of behavior and identify deviations that may indicate security threats or intrusions.
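Production systems would use deep generative models, but the principle can be shown with the simplest generative model there is: fit a Gaussian to traffic observed during normal operation and flag observations the model considers very unlikely. The baseline numbers (requests per minute) and the 3-sigma cutoff are assumptions for illustration.

```python
import math
import statistics


def fit_gaussian(samples):
    """Learn 'normal' behaviour as a mean and standard deviation."""
    return statistics.fmean(samples), statistics.stdev(samples)


def log_likelihood(x, mu, sigma):
    """Log-density of x under a Normal(mu, sigma) model."""
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)


def flag_anomalies(observations, mu, sigma, z_threshold=3.0):
    """Flag points less likely than the z_threshold-sigma boundary under the learned model."""
    cutoff = log_likelihood(mu + z_threshold * sigma, mu, sigma)
    return [x for x in observations if log_likelihood(x, mu, sigma) < cutoff]


# Hypothetical requests per minute during normal operation.
baseline = [120, 118, 125, 130, 122, 119, 127, 121, 124, 126]
mu, sigma = fit_gaussian(baseline)
print(flag_anomalies([123, 129, 480, 117], mu, sigma))  # [480]
```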

- Biometric Authentication

Cloud-based applications can benefit from generative AI’s ability to model and analyze biometric characteristics, such as voice or facial features, which can increase the reliability and security of user authentication.

2. Personalization

- Content Generation and Recommendation

Personalized content, such as ads, articles, or product suggestions, can be generated by generative AI for cloud services, based on user behavior and preferences. This improves the personalization of content delivery, leading to higher user engagement and satisfaction.

- Virtual Assistants and Chatbots

Generative AI allows cloud-based virtual assistants and chatbots to deliver more natural and context-relevant interactions. These systems can understand user queries and generate human-like responses, resulting in a more personalized and intuitive user experience.

3. Innovation

- Product Design and Creativity

Generative AI in the cloud can help businesses in design-intensive industries with creative tasks such as product design, image synthesis, and artistic content creation. Based on specified criteria, generative models can generate visual elements and suggest novel ideas to designers.

- Drug Discovery and Healthcare

Cloud platforms can host generative AI models that support drug discovery and the personalization of treatments for individual patients, contributing to more innovative and effective healthcare outcomes.

4. Language Translation and Localization

- Multilingual Support

Cloud-based language translation services can use generative models to improve translation quality. These models can learn context and language-specific nuances, producing more fluent and appropriate translations for users in different languages.

5. Supply Chain Optimization

- Demand Forecasting

By using cloud-based supply chain management, businesses can utilize generative AI to forecast demand more precisely. Generative models can examine past data and outside influences to produce predictions, assisting businesses in optimizing stock levels and improving overall supply chain performance.

6. Financial Fraud Detection

- Pattern Recognition in Transactions

In cloud-based financial services, generative AI models can help improve transaction security. They can identify irregular patterns that signal fraud, enabling real-time detection and prevention of financial fraud. By running on cloud platforms as a service, generative AI applications can also leverage the scalability, availability, and efficiency of the cloud, as well as access to various tools and frameworks.

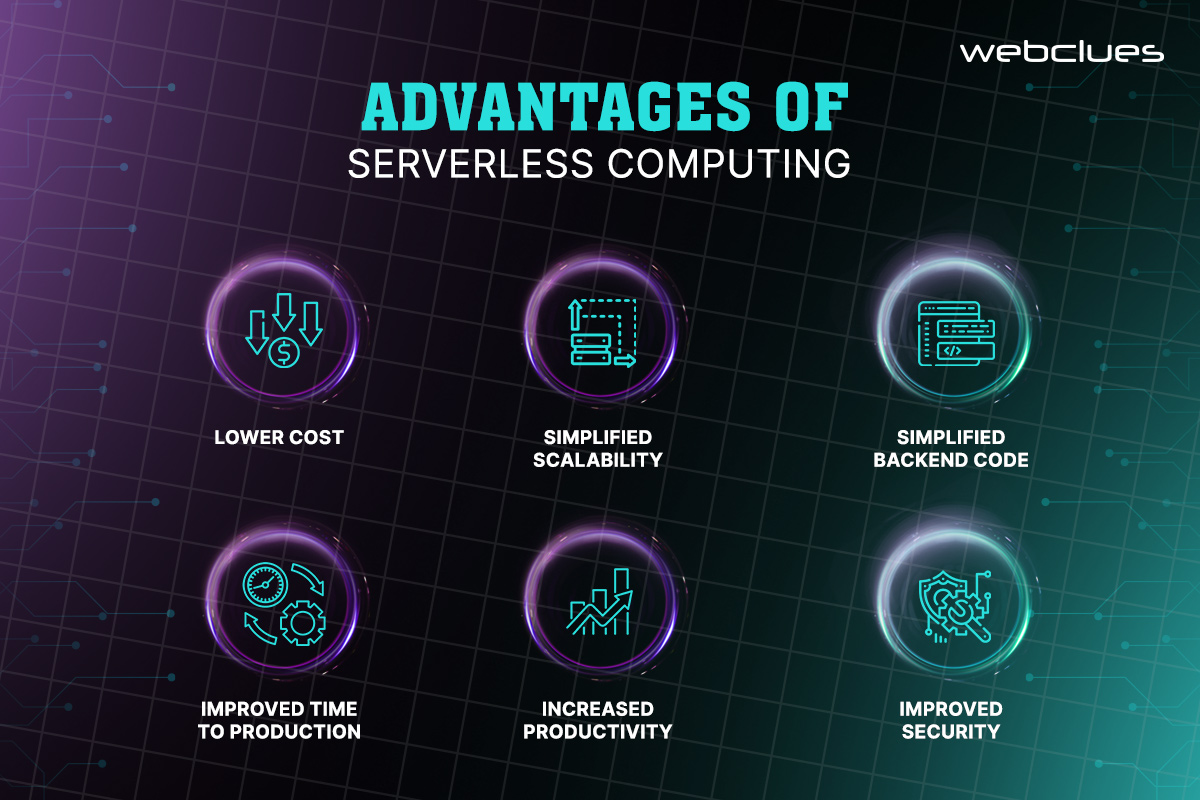

Trend 3- Serverless and Pay-As-You-Go Cloud

With the Serverless and Pay-As-You-Go Cloud trend, the way organizations deploy and manage their applications in the cloud has changed dramatically. Previously, deploying and maintaining applications required the provisioning of servers with fixed capacities, often causing underutilized resources and higher costs. However, this approach has been transformed by the emergence of serverless computing and pay-as-you-go cloud services.

Serverless computing allows developers to focus on code and functions rather than servers. The cloud provider handles infrastructure management, including scaling, patching, and operations, which makes applications more adaptable and efficient to run.

The pay-as-you-go model pairs naturally with serverless computing, as it provides a flexible and cost-effective billing method. Organizations are charged only for the resources they actually consume, such as computing power, storage, and data transfer. This way, they avoid paying for infrastructure before they need it and can adjust resources to demand, saving costs and allocating resources efficiently.
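To see how pay-as-you-go billing plays out, here is a back-of-the-envelope estimate for a serverless function billed per request plus per GB-second of compute (memory allocated multiplied by execution time), a pricing structure common to serverless platforms. The rates passed in below are placeholders, not any provider's actual prices, and the sketch ignores free tiers and data-transfer charges.

```python
def monthly_serverless_cost(invocations, avg_duration_ms, memory_mb,
                            price_per_million_requests, price_per_gb_second):
    """Estimate a month's bill: request charges plus GB-seconds of compute."""
    request_cost = invocations / 1_000_000 * price_per_million_requests
    # GB-seconds = number of runs x seconds per run x GB of memory allocated
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return request_cost + gb_seconds * price_per_gb_second


# Placeholder rates for illustration only.
busy = monthly_serverless_cost(3_000_000, avg_duration_ms=120, memory_mb=512,
                               price_per_million_requests=0.20, price_per_gb_second=0.00001)
idle = monthly_serverless_cost(0, avg_duration_ms=120, memory_mb=512,
                               price_per_million_requests=0.20, price_per_gb_second=0.00001)
print(f"busy month: ${busy:.2f}, idle month: ${idle:.2f}")  # busy month: $2.40, idle month: $0.00
```

The idle case is the point of the model: with no invocations there is nothing to pay for, whereas a provisioned server is billed whether or not it handles traffic.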

What are the best practices for serverless and pay-as-you-go cloud computing?

- Choose the right cloud provider and platform

When it comes to serverless and pay-as-you-go cloud computing, different providers and platforms may offer different features, pricing, and support for serverless applications. To ensure that you choose the best option for your needs and goals, it is important to compare and evaluate the available options. You should also consider the compatibility, integration, and migration of your existing applications and data with the chosen provider and platform.

- Design your applications for serverless architecture

Serverless computing requires a different approach to application design than traditional server-based architecture. To design applications for this environment, you should create a collection of independent and stateless functions that can be triggered by events like HTTP requests, database changes, or message queues. Additionally, you should follow the principles of microservices, such as loose coupling, high cohesion, and modularity. Finally, you should use the appropriate services and tools provided by the cloud provider and platform to enhance your application functionality, such as authentication, storage, and analytics.
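The sketch below shows what "independent, stateless, event-triggered" can look like in Python: a single entry point with the `handler(event, context)` shape used by several function-as-a-service platforms (AWS Lambda's Python runtime, for example) routes each event to a small function that keeps no state between calls. The `source` field and the event layouts are hypothetical; real platforms define their own event schemas.

```python
import json


def handle_http(event):
    """Respond to an HTTP-style event whose JSON body may contain a "name"."""
    body = json.loads(event.get("body") or "{}")
    name = body.get("name", "world")
    return {"statusCode": 200, "body": json.dumps({"message": f"hello, {name}"})}


def handle_queue(event):
    """Process a batch of queue messages; each message is handled independently."""
    return {"processed": len(event.get("messages", []))}


# Map each (hypothetical) event source to its function.
HANDLERS = {"http": handle_http, "queue": handle_queue}


def handler(event, context=None):
    """Single entry point. Nothing is kept between calls: all input arrives in `event`,
    and any durable state would live in an external service such as a database."""
    source = event.get("source")
    if source not in HANDLERS:
        raise ValueError(f"unsupported event source: {source!r}")
    return HANDLERS[source](event)


print(handler({"source": "http", "body": json.dumps({"name": "Ada"})}))
print(handler({"source": "queue", "messages": ["m1", "m2", "m3"]}))
```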

- Monitor and optimize your resource usage and costs

With pay-as-you-go cloud computing, optimizing your resource usage and costs is key. To achieve this, you should track and measure your resource consumption, such as CPU time, memory, and network bandwidth, and identify any anomalies or inefficiencies. You should also optimize your code and configuration to reduce the cold start time, latency, and errors of your functions. Finally, you should use the billing and cost management tools provided by the cloud provider and platform to estimate and control your spending and avoid any surprises.

What are the challenges of serverless and pay-as-you-go cloud computing?

- Security and Compliance

Serverless cloud computing can pose security and compliance risks, as you have less control and visibility over the infrastructure and data. To address these risks, it is important to ensure that your cloud provider and platform comply with the relevant regulations and standards, such as GDPR, HIPAA, and PCI DSS. You should also implement proper security measures, such as encryption, authentication, and authorization, to protect your data and functions from unauthorized access or malicious attacks.

- Testing and debugging

Testing and debugging can be more difficult when using serverless cloud computing, as you have less access and information about the underlying infrastructure and environment. To address these challenges, it is important to use the appropriate tools and frameworks to test and debug your functions locally and remotely and simulate the events and conditions that trigger your functions. You should also monitor and log your function’s performance, errors, and exceptions, and use the feedback to improve your code and configuration.
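Because serverless functions are ordinary functions that receive an event, much of their logic can be tested locally by constructing simulated events, without deploying anything. A minimal sketch using Python's standard `unittest` module; the order-validation `handler` and its event shape are hypothetical.

```python
import json
import unittest


def handler(event, context=None):
    """Hypothetical order-validation function: rejects orders with a non-positive quantity."""
    order = json.loads(event["body"])
    if order.get("quantity", 0) <= 0:
        return {"statusCode": 400, "body": json.dumps({"error": "quantity must be positive"})}
    return {"statusCode": 200, "body": json.dumps({"accepted": order["sku"]})}


class HandlerTest(unittest.TestCase):
    def make_event(self, payload):
        # Simulate the HTTP trigger locally instead of invoking the deployed function.
        return {"body": json.dumps(payload)}

    def test_valid_order(self):
        resp = handler(self.make_event({"sku": "A-1", "quantity": 2}))
        self.assertEqual(resp["statusCode"], 200)

    def test_rejects_zero_quantity(self):
        resp = handler(self.make_event({"sku": "A-1", "quantity": 0}))
        self.assertEqual(resp["statusCode"], 400)


if __name__ == "__main__":
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(HandlerTest)
    unittest.TextTestRunner(verbosity=2).run(suite)
```

Local tests like these catch logic errors cheaply; behaviour that depends on the real environment (permissions, timeouts, cold starts) still needs monitoring and logging after deployment.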

- Vendor lock-in and portability

When using serverless and pay-as-you-go cloud computing, you may become more dependent on the cloud provider and platform, as they may use proprietary or incompatible technologies and services. It is important to weigh the convenience and functionality of the cloud provider and platform against the flexibility and portability of your applications and data. Additionally, you should plan for the possibility of switching or migrating to a different provider or platform, and evaluate the costs and challenges involved.
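One way to limit lock-in is to put provider-specific services behind a small interface of your own, so application code depends on that interface rather than on a vendor SDK. In the sketch below, `BlobStore`, `InMemoryBlobStore`, and `archive_report` are hypothetical names; migrating providers would mean writing a new adapter class that satisfies `BlobStore`, leaving `archive_report` unchanged.

```python
from typing import Protocol


class BlobStore(Protocol):
    """The only storage operations the application is allowed to depend on."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryBlobStore:
    """Stand-in backend for local runs and tests; a real adapter would wrap a provider's SDK."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


def archive_report(store: BlobStore, report_id: str, text: str) -> str:
    """Application logic: knows nothing about which cloud stores the data."""
    key = f"reports/{report_id}.txt"
    store.put(key, text.encode("utf-8"))
    return key


store = InMemoryBlobStore()
key = archive_report(store, "2025-q1", "quarterly summary")
print(key, store.get(key))  # reports/2025-q1.txt b'quarterly summary'
```

The trade-off is that the interface can only expose features all target providers share, which is exactly the convenience-versus-portability balance described above.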

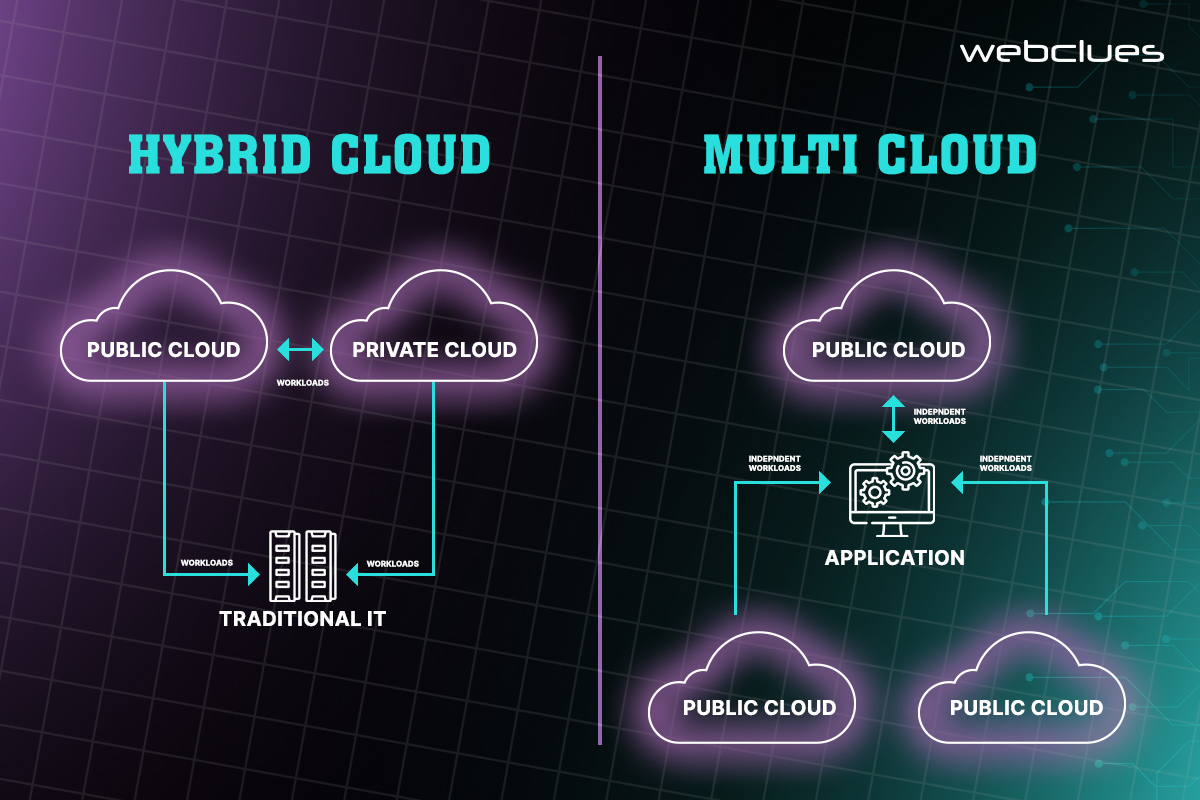

Trend 4- The Adoption of Multi-cloud and Hybrid Cloud Strategies

As cloud computing evolves rapidly, a major trend that organizations have followed more frequently in recent years is the use of multi-cloud and hybrid cloud strategies.

Multi-cloud is the term for using services from different cloud providers to fulfill specific business needs. This way, organizations do not rely on a single cloud platform but use the best features of different providers. This improves performance, increases redundancy, and reduces risks. This also allows flexibility, as businesses can select the most appropriate services for different parts of their operations.

An organization may use various cloud providers for different purposes, such as data storage, machine learning services, and specialized applications. This diversity helps avoid being stuck with one vendor, improves resilience, and provides the flexibility needed in today’s changing business environment.

A hybrid cloud, on the other hand, creates an integrated and customized computing environment by combining on-premises infrastructure with cloud services. This strategy lets businesses keep some crucial data and applications on-premises while using the cloud’s scalability and efficiency for other workloads.

A hybrid cloud setup allows smooth communication between on-premises data centers and cloud resources, letting data and applications move easily between them. This approach is very useful for organizations with legacy systems or strict regulatory requirements, as it delivers the advantages of cloud computing without requiring a full move to a cloud-only model.

Why are they becoming more popular among businesses and users?

- Optimized Performance

Multi-cloud strategies allow organizations to optimize performance for specific workloads by selecting the most suitable services from different providers. This flexibility ensures that businesses can utilize the strengths of each cloud platform to achieve better overall performance.

- Reduced Vendor Lock-In

Businesses can avoid complete dependency on a single vendor by diversifying across multiple cloud providers. This reduces the risks associated with vendor lock-in, providing the freedom to switch providers or negotiate better terms. It also ensures that organizations are not constrained by the limitations of a single cloud ecosystem.

- Enhanced Resilience and Redundancy

Multi-cloud and hybrid cloud architectures ensure continuous availability and reliability by distributing workloads across various cloud platforms or combining on-premises resources with cloud services. This redundancy minimizes the impact of potential outages and offers improved resilience.

- Scalability and Resource Optimization

By adopting hybrid cloud solutions, organizations can utilize the elasticity of the cloud for variable workloads while retaining control over certain critical applications and data on-premises. This scalability ensures efficient resource utilization and cost-effectiveness.

- Compliance and Regulatory Considerations

Hybrid cloud solutions allow businesses to adhere to regulatory standards without sacrificing the benefits of cloud computing by keeping sensitive data on-premises while utilizing the cloud for non-sensitive workloads. This is particularly appealing to industries with strict regulatory requirements or compliance concerns.

- Strategic Flexibility

In today’s dynamic business environment, multi-cloud and hybrid cloud approaches provide strategic flexibility, enabling organizations to adapt to evolving business needs. These strategies allow businesses to integrate new technologies, adopt emerging cloud services, and respond to market changes with ease.

- Cost Optimization

Businesses can optimize costs by choosing the most cost-effective service for each specific task. Additionally, the ability to scale resources up and down based on demand contributes to overall cost efficiency.

- Innovation and Technology Diversity

Multi-cloud strategies allow organizations to access a wide range of services offered by different cloud providers. This lets businesses utilize cutting-edge technologies and features provided by various platforms, fostering innovation.

- Improved Disaster Recovery

The distributed nature of multi-cloud and hybrid architectures improves disaster recovery capabilities. If one cloud provider’s infrastructure fails, services can automatically fail over to another provider, minimizing downtime and data loss.
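In practice, cross-cloud failover combines health checks, traffic routing (often DNS-based), and data replicated across providers. The sketch below covers only the client-side decision logic: try providers in priority order and fall back on failure. The provider names, the `ProviderUnavailable` exception, and the stub functions are hypothetical.

```python
class ProviderUnavailable(Exception):
    """Raised by a provider call when that provider cannot serve the request."""


def call_with_failover(providers, request):
    """Try (name, callable) providers in priority order; return (name, result) from the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(request)
        except ProviderUnavailable as exc:
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Hypothetical stubs standing in for calls to two different clouds.
def primary(request):
    raise ProviderUnavailable("region outage")


def secondary(request):
    return f"processed {request}"


print(call_with_failover([("primary-cloud", primary), ("secondary-cloud", secondary)], "order-42"))
# ('secondary-cloud', 'processed order-42')
```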

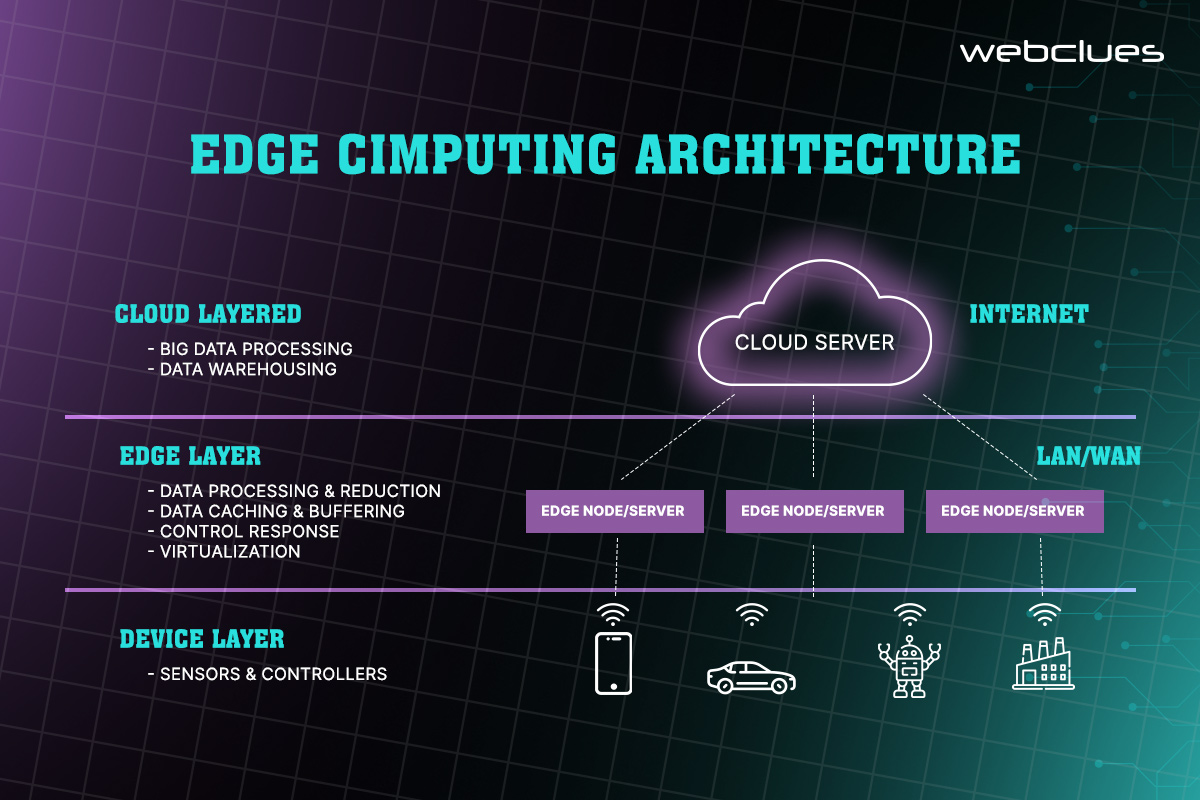

Trend 5- The Advancement of Edge Computing

Edge computing is an important trend in the shifting world of technology: it brings computation and data storage close to the source of data generation. Unlike traditional computing models, it does not rely solely on centralized processing in distant data centers. This minimizes latency, improves efficiency, and enables real-time data processing.

Edge computing is based on the idea of placing computing resources such as servers, storage, and networking devices near the edge of the network, where data is generated. This allows faster data processing and analysis, which is beneficial for applications that demand low latency, high bandwidth, and quick response times.

When data must be processed rapidly, edge computing can overcome the latency limitations of centralized cloud computing. Applications such as augmented reality, self-driving cars, and industrial IoT rely on real-time data analysis, and edge computing is essential for supporting them.

By transmitting only processed and relevant information, edge computing reduces the need for bandwidth. Edge computing is opening up new avenues for innovation and efficiency for various industries as this trend develops. This is changing the technology landscape and leading to a more interconnected and responsive future for smart cities, healthcare, manufacturing, and beyond.

How will it complement and reshape the cloud computing framework?

- Reduced Latency

By processing data closer to the point of generation, edge computing significantly reduces latency. This is especially important for applications that require real-time responsiveness, such as autonomous vehicles, remote healthcare monitoring, and augmented reality. Unlike cloud computing, which provides centralized processing, the proximity of edge computing minimizes the time it takes for data to travel to and from distant data centers, ensuring quicker response times.
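A quick calculation shows why proximity matters. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200 km per millisecond, so distance alone sets a floor on round-trip time. The two distances below are hypothetical, and real latency is higher once routing, queuing, and processing are added.

```python
FIBER_SPEED_KM_PER_MS = 200.0  # approx. speed of light in optical fiber (~2/3 c)


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip propagation delay; ignores routing, queuing, and processing."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


for label, km in [("edge node, 10 km away", 10), ("regional data center, 1,500 km away", 1500)]:
    print(f"{label}: >= {min_round_trip_ms(km):.1f} ms")
# edge node, 10 km away: >= 0.1 ms
# regional data center, 1,500 km away: >= 15.0 ms
```

No amount of server-side optimization can remove that 15 ms floor for the distant data center, which is why latency-critical loops run at the edge.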

- Bandwidth Optimization

Edge computing reduces the burden on network bandwidth by processing and filtering data locally. Instead of transmitting large volumes of raw data to central cloud servers, it sends only relevant, processed information, making more efficient use of network resources. This optimization is particularly useful where bandwidth is limited or expensive.
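A simple form of this local processing is windowed aggregation: the edge node summarizes each window of raw samples and uploads only the summary. In this sketch the sampling scheme (for example, one reading per second summarized once per minute) and the min/mean/max summary are assumptions for illustration.

```python
import statistics


def summarize_windows(samples, window_size):
    """Collapse raw samples into one (min, mean, max) summary per fixed-size window."""
    summaries = []
    for start in range(0, len(samples), window_size):
        window = samples[start:start + window_size]
        summaries.append({
            "min": min(window),
            "mean": statistics.fmean(window),
            "max": max(window),
        })
    return summaries


# Ten minutes of hypothetical one-per-second readings.
raw = [float(i % 7) for i in range(600)]
summary = summarize_windows(raw, window_size=60)
print(f"{len(raw)} raw values -> {len(summary)} summaries ({3 * len(summary)} values)")
# 600 raw values -> 10 summaries (30 values)
```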

- Enhanced Security and Privacy

Processing sensitive data closer to its source helps improve security and privacy. Critical data can be processed locally, reducing the risk associated with transmitting sensitive information over networks. This is especially important for industries like healthcare and finance, where data privacy and regulatory compliance are of utmost importance.

- Scalability and Flexibility

Edge and cloud computing can be combined to create a scalable and flexible infrastructure. Edge nodes can handle localized processing demands, while cloud resources can be utilized for more intensive computations and storage. This hybrid approach allows organizations to dynamically scale their computing resources based on the specific requirements of different tasks and applications.

- Resilience and Redundancy

Distributing processing capabilities across multiple edge nodes improves the overall system’s resilience. In the event of a network failure or a localized issue, edge nodes can continue to operate independently, ensuring continued functionality. This redundancy minimizes the impact of potential disruptions and improves overall system reliability.
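A common pattern behind "continue to operate independently" is store-and-forward: the edge node keeps accepting and processing data while the uplink is down, buffers what it would have sent, and drains the backlog once connectivity returns. The class name, the buffer limit, and the simulated uplink below are hypothetical.

```python
from collections import deque


class StoreAndForward:
    """Edge-side buffer: keep accepting data offline, upload the backlog when the uplink returns."""

    def __init__(self, uplink, max_buffered=10_000):
        # uplink: callable that sends one record and raises ConnectionError when offline
        self.uplink = uplink
        # Bounded buffer: if it fills up, the oldest records are discarded first.
        self.buffer = deque(maxlen=max_buffered)

    def submit(self, record):
        self.buffer.append(record)
        self.flush()

    def flush(self):
        """Send buffered records oldest-first; return True once the buffer is empty."""
        while self.buffer:
            try:
                self.uplink(self.buffer[0])
            except ConnectionError:
                return False  # still offline: keep the remaining records for later
            self.buffer.popleft()
        return True


# Simulated uplink that can be toggled on and off.
sent = []
link = {"up": False}


def uplink(record):
    if not link["up"]:
        raise ConnectionError("no route to cloud")
    sent.append(record)


node = StoreAndForward(uplink)
node.submit("reading-1")
node.submit("reading-2")
print(len(node.buffer), sent)  # 2 []
link["up"] = True
node.flush()
print(len(node.buffer), sent)  # 0 ['reading-1', 'reading-2']
```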

- Optimized Cost Structure

By delegating specific processing tasks to edge devices, companies can reduce their cloud computing expenses. Edge computing allows for more efficient use of cloud resources by sending only selected data to the cloud for more complex analysis or long-term storage. This can result in cost savings, especially for applications with large datasets.

How is edge computing helping IoT applications and user experiences?

- Smart Cities and Traffic Management

Real-time analysis of traffic cameras at intersections by edge devices can reduce latency and bandwidth requirements, leading to faster response times for adaptive traffic signal control based on real-time conditions.

- Healthcare Monitoring Devices

By processing data locally, edge devices can reduce the latency and bandwidth required for data transmission. This enables real-time feedback from healthcare devices to patients and clinicians, which can support faster intervention and recovery.

- Manufacturing and Industrial IoT

Edge computing allows for real-time processing of data, reducing latency and allowing faster decision-making. This can result in improved efficiency and productivity.

- Augmented Reality (AR) and Virtual Reality (VR)

Real-time processing of data can be enabled by edge computing, which helps reduce latency and improve the quality of the user experience.

- Retail and Customer Engagement

By reducing latency and bandwidth requirements, edge computing can produce faster response times and improved customer experiences.

- Autonomous Vehicles

The functioning of self-driving cars can be improved by edge computing, which reduces latency and enables real-time data processing.

Conclusion

Beyond 2025, the cloud will be more than a technology infrastructure; it will be a transformative force across industries. As we continue on this journey, we should stay attentive, flexible, and creative. The future of cloud computing is not an endpoint but an ongoing journey toward a more connected, intelligent, and efficient digital era.

Want to take your business to the next level with advanced cloud computing services? Discover the endless opportunities with WebClues Infotech and start your journey to a successful future.

Reach out to us today and find out how our skills can transform your digital environment.

